Still Playing Games: Mobile SoC Follow-ups Should be Standard

The topic of honesty in the world of mobile performance benchmarks can draw pursed lips and awkward glances when brought up in a meeting with an SoC provider. It’s no secret that gaming the system has been a frequent concern since phone reviews became an intrinsic part of the purchasing process for many consumers, but it isn’t a popular subject. Back in 2013, some of the most respected outlets in the media showed that nearly everyone involved in the process shared some portion of the blame, resulting in dishonest scores and performance metrics that left customers ill-informed.

A similar, but more nuanced, discussion can be had about SoC and handset performance over time. This can take two angles: first, benchmark performance in long-term usage scenarios (sustained performance); and second, phone performance across software and firmware updates released weeks, months, and even years later.

The first vector, performance during extended use, is something that media and analyst groups can evaluate today, and it should be done more often to accurately measure the real-world experience of users. Take a benchmark like GFXBench and its Manhattan sub-test. This benchmark is one of the most referenced by silicon and phone vendors, but it runs for only 62 seconds. A long gaming session on a high-end smartphone, on the other hand, might last closer to 62 minutes. That’s on the high end of scenarios, certainly, but by many estimates the average session is still around 10 minutes, roughly ten times the length of the test. Clearly, sustained performance is an important metric.

This data shows four of the most popular flagship smartphones on the market running 3DMark Slingshot ES 3.0 at 1080p resolution through 11 consecutive iterations. Each benchmark run takes about 3.5 minutes, giving us an estimated 38 minutes of continuous gaming time. As the SoC and GPU run at top clock speeds, the phone heats up, which generally forces clock speeds down over time to maintain a comfortable surface temperature and to reduce strain on the battery. (Higher average SoC temperatures result in non-linear increases in power use.)

A couple of things stand out in the results above. First, the iPhone 7 Plus has the most dramatic drop in performance from run 1 to run 2 (-15%), and its score is down by more than 25% by the end of run 11. The Google Pixel’s 3DMark score decreases by about 9% over the entire test, but the Pixel XL, with its larger surface area and added room for passive cooling, shows more consistent results; its score decreases by only 4.9%. The Huawei Mate 9 drops by almost 12% and settles at that level by run 4, representing 12+ minutes of gaming time.
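The percentages above are simple relative changes against the first run. A minimal sketch of that arithmetic, assuming scores are listed in run order and each run takes the roughly 3.5 minutes quoted earlier; the function names and the example scores are hypothetical, not our measured data:

```python
from typing import Dict, List

# Approximate length of one 3DMark Slingshot ES 3.0 run (assumption taken from the text).
RUN_MINUTES = 3.5


def percent_change(baseline: float, value: float) -> float:
    """Relative change of `value` versus `baseline`, in percent (negative = slower)."""
    return (value - baseline) / baseline * 100.0


def summarize_runs(scores: List[float]) -> Dict[str, float]:
    """Summarize consecutive benchmark scores, first run first."""
    if len(scores) < 2:
        raise ValueError("need at least two runs to measure a drop")
    return {
        "run1_to_run2_pct": percent_change(scores[0], scores[1]),
        "run1_to_last_pct": percent_change(scores[0], scores[-1]),
        "elapsed_minutes": len(scores) * RUN_MINUTES,
    }


if __name__ == "__main__":
    # Hypothetical scores for 11 runs, for illustration only.
    example = [3000, 2550, 2400, 2300, 2250, 2250, 2240, 2240, 2235, 2230, 2225]
    print(summarize_runs(example))
```

With 11 runs the elapsed time works out to 38.5 minutes, in line with the roughly 38 minutes of gaming time described above.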

So which number is best to report from a review and recommendation standpoint? It is easier to show peak performance from a benchmark, but that does not represent the actual gaming experience of users who will be using the device for longer than a single 3DMark Slingshot run of a few minutes. All four of these phones show nearly consistent performance starting at run 4, or minute 12+. Testing this way takes more time and effort, and the amount required will vary based on the test and benchmark program being used.
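One way to report both numbers is to keep the peak score and add a sustained score averaged over the runs after performance settles. A minimal sketch, assuming “settled” means every remaining run stays within a fractional tolerance (2% here, an arbitrary choice) of the final run; the function names and the settling rule are illustrative, not a standard that reviewers use:

```python
from statistics import mean
from typing import List, Tuple


def steady_state_start(scores: List[float], tolerance: float = 0.02) -> int:
    """Return the 1-based run number from which every remaining score stays
    within `tolerance` (a fraction) of the final run's score."""
    if not scores:
        raise ValueError("no scores given")
    final = scores[-1]
    start = len(scores)
    # Walk backward from the last run until a score falls outside the band.
    for i in range(len(scores) - 1, -1, -1):
        if abs(scores[i] - final) / final > tolerance:
            break
        start = i + 1
    return start


def peak_and_sustained(scores: List[float], tolerance: float = 0.02) -> Tuple[float, float, int]:
    """Return (peak score, mean score over the settled runs, first settled run)."""
    start = steady_state_start(scores, tolerance)
    return max(scores), mean(scores[start - 1:]), start
```

Reporting the pair (peak, sustained) alongside the run where scores settle gives readers both the launch-slide number and the one they will actually experience after a few minutes of play.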

The second vector of performance over time is just that: long-term use. Normally, when we think of changes in phone behavior over time, we focus only on battery life. It’s well known that a battery on day one will last longer than on day 365, but it’s nearly impossible to simulate that degradation without detailed information about the battery chemistry used by each vendor and how previous batteries of that type have behaved. Quick charging and consumer usage patterns affect results as well, complicating things even further.

While working on the Mate 9 review over at PC Perspective, Sebastian Peak pointed out an interesting fact that prompted a follow-up. GFXBench.com collects benchmark results from around the world and lets visitors browse them sorted by either best score (the highest result from that phone and hardware) or median score (the midpoint of the distribution). The Mate 9 score for Manhattan 1080p offscreen is currently 54.9 FPS when sorted by best, but only 36.8 FPS when sorted by median. While this might appear to be a result of the first vector discussed above, the highest result we have seen from the Mate 9 hardware in house is ~38 FPS, even when the phone had been idle for several minutes. (For comparison, the Pixel XL shows a frame rate of 49.1 FPS sorted by best and 47.2 FPS sorted by median.)
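The gap between the two sort orders comes down to how each statistic treats outliers: a handful of unusually high submissions (for example, results recorded on different firmware or a cooler device) set the “best” score, while the median tracks what most submitters see. A minimal sketch with made-up FPS values, not GFXBench.com data:

```python
from statistics import median
from typing import Dict, List


def best_and_median(fps_results: List[float]) -> Dict[str, float]:
    """Summarize crowd-sourced results the two ways a results browser might sort them."""
    return {"best": max(fps_results), "median": median(fps_results)}


if __name__ == "__main__":
    # Hypothetical submissions: two high results, five lower ones.
    submissions = [55.0, 54.0, 38.0, 37.0, 37.0, 36.0, 36.0]
    print(best_and_median(submissions))  # {'best': 55.0, 'median': 37.0}
```

Two outliers are enough to move “best” by nearly 20 FPS without shifting the median at all, which is why a large best-versus-median gap is worth investigating.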

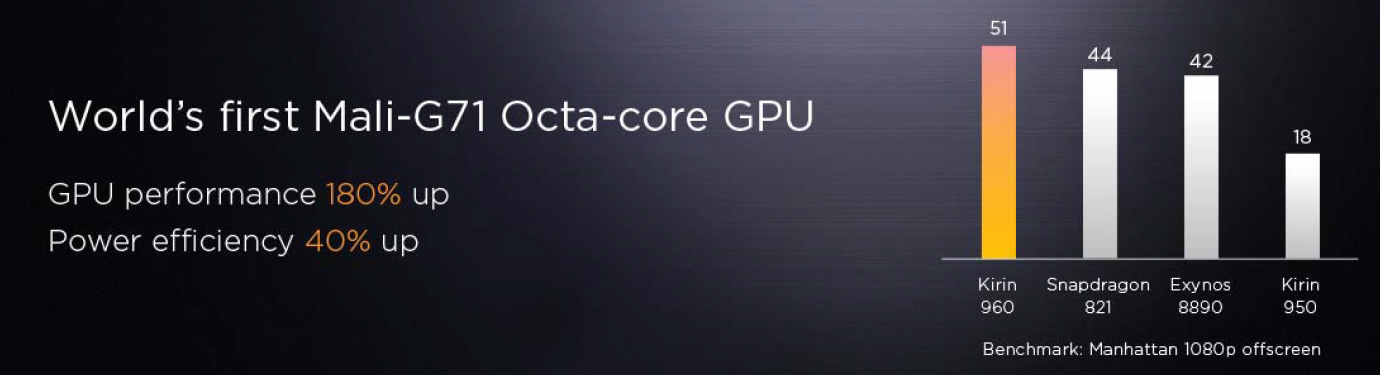

Going back to the initial launch of the Kirin 960 SoC from HiSilicon, the chip used in the Huawei Mate 9 smartphone, the vendor claimed performance in the GFXBench Manhattan 1080p benchmark of 51 FPS.

Considering our testing today, this seems like an impossible claim.

Early reviews of the Mate 9 showed impressive graphics performance from the flagship Huawei device and its Kirin 960 SoC, with some Manhattan results close to the 51 FPS quoted in HiSilicon’s launch presentation.

Early in the year, a firmware update was pushed to the Mate 9 that addressed “daily use” functions, extending battery life and lowering exterior surface temperatures. Though this is not stated in any release notes or documentation, the only practical way to lower temperatures on a passively cooled phone is to reduce power draw, which in practice means lowering clock speeds. Lower clock speeds mean lower performance.
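The link between clocks and heat can be made concrete with the textbook first-order model of CMOS dynamic power, which is general background rather than anything Huawei or HiSilicon has published. With activity factor $\alpha$, switched capacitance $C$, supply voltage $V$, and clock frequency $f$:

$$
P_{\text{dyn}} \approx \alpha C V^{2} f
$$

If voltage is scaled down roughly in proportion to frequency, as dynamic voltage and frequency scaling attempts to do, then $V \propto f$ and

$$
P_{\text{dyn}} \propto f^{3}
$$

so even a modest clock reduction can cut heat output substantially. This simple model ignores leakage power, which grows with temperature and is part of why hotter SoCs draw disproportionately more power.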

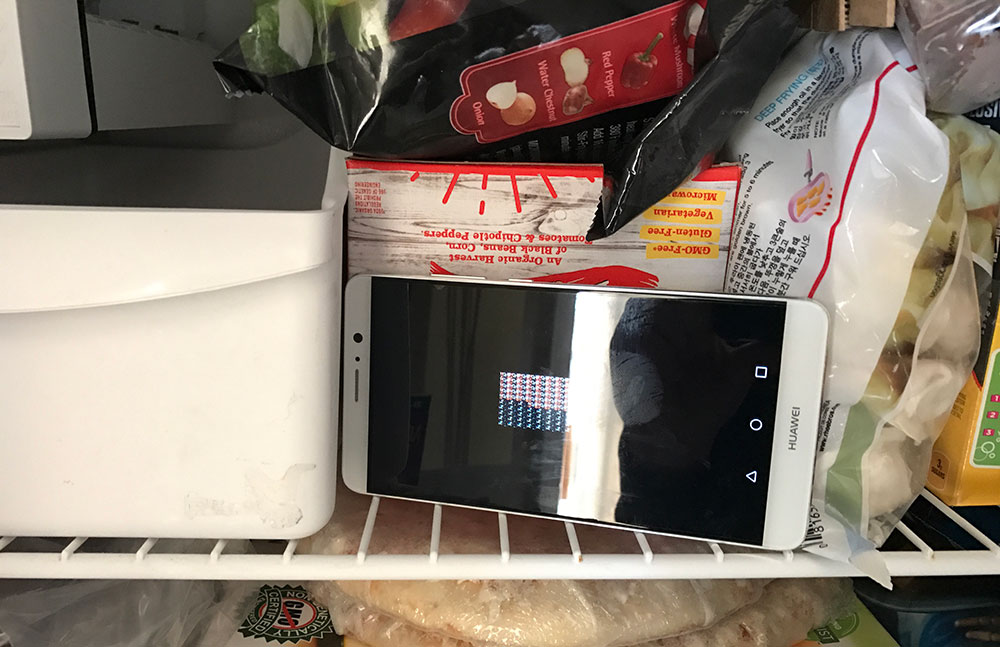

To ensure that thermal constraints were removed or alleviated, and to see how this was reflected in performance, if at all, we put our Mate 9 smartphone in the freezer.

While in the freezer, we ran the Manhattan 1080p ES 3.0 test five times in succession, for a total of ~12 minutes. Just before our fifth loop of the benchmark, the Mate 9’s surface temperature was 34°F. The result was a score of 54 FPS, nearly perfectly matching the “best” scores on the GFXBench.com site.

Regardless of why or how, the real-world performance of the Kirin 960 measured in our own testing is significantly lower than its stated performance. HiSilicon and Huawei are far from the only vendors guilty of these kinds of games and post-release adjustments, but this situation is a perfect example of the changes in performance and product evaluation that the mobile market needs to undergo. Too many reviews are cursory, focused on speed of publication rather than accuracy and unwilling to dig deep into the technology and its integration, to the disservice of an audience that spends hundreds of dollars per device.

Follow-up tests that monitor a phone’s performance over its lifetime are another aspect that could be integrated into mobile device testing, but stories on phones that are months or years old are not attractive to advertisers or to the hardware providers themselves, who would prefer to focus attention on newer flagship products. A standard check-up of device hardware 3 months after release should become part of the system of checks and balances between manufacturers, reviewers, and consumers.
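A check-up like this could be as simple as re-running the launch-day benchmarks on current firmware and comparing medians. A minimal sketch, assuming a hypothetical `CheckUp` record and an arbitrary 5% regression threshold; neither reflects an existing industry process, and any scores fed into it here are invented:

```python
from dataclasses import dataclass
from statistics import median
from typing import List


@dataclass
class CheckUp:
    """Hypothetical record pairing launch-day and follow-up results for one benchmark."""
    benchmark: str
    launch_scores: List[float]
    followup_scores: List[float]

    def change_pct(self) -> float:
        """Percent change of the follow-up median versus the launch median."""
        before = median(self.launch_scores)
        after = median(self.followup_scores)
        return (after - before) / before * 100.0

    def flagged(self, threshold_pct: float = 5.0) -> bool:
        """True if performance fell by more than `threshold_pct` percent."""
        return self.change_pct() < -threshold_pct


if __name__ == "__main__":
    # Invented example values, for illustration only.
    check = CheckUp("Manhattan 1080p offscreen", [50.0, 51.0, 52.0], [37.0, 38.0, 36.0])
    print(f"{check.benchmark}: {check.change_pct():.1f}% flagged={check.flagged()}")
```

Using medians rather than best scores keeps a single lucky run, on either side of the update, from hiding or exaggerating a regression.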